SQLAlchemy is arguably the most powerful and ubiquitous ORM framework for Python.

At Oursky, we have been using SQLAlchemy for quite a period of time and appreciated the flexibility and elegance it provides over the Data Mapper abstraction. No doubt, it works very well for modern web applications but what about long-running background jobs? Would the abstraction get in your ways? (tl;dr: yes, but we still prefer it)

Here are some hands-on experiences from us.

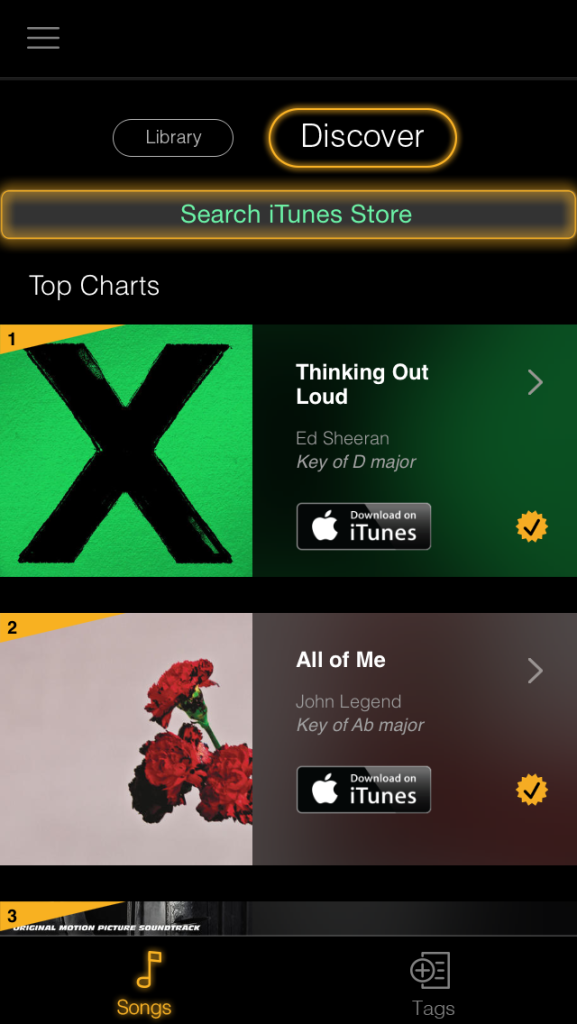

We built a popular iOS application with a song recommendation system at the backend. The system suggests a top list for 20 popular songs.

Previously our editors hand-picked popular songs by download count and gather a new playlist as a recommendation to users. Now, we want to automate this process and generate the playlist weekly.

Obviously it can be a cron job.

We decided to write a python script for it. The flow follows:

- Get the top 20 songs (across 100k+ analyzed songs)

- Create a new playlist and insert it into the database

- Run the above script weekly

Easy! It is a good exercise to get new python programmers familiar with SQLAlchemy. One of our colleagues, not having written a single line of Python, submitted the following piece of code for review:

def gen_autotrend_playlist(playlistdate):

r = get_redis()

song_ids = get_trending_song_id(r, playlistdate)

if not song_ids:

return

new_playlist = Playlist(name='Top Charts',

ordering=1,

available=0,

admin_available=1,

slug='topchart-week-' + playlistdate)

db.session.add(new_playlist)

db.session.commit()

for i, song_id in enumerate(song_ids):

new_entry = ListedEntry(song_id=song_id,

playlist_id=new_playlist.id,

ordering=i,

is_top=1)

db.session.add(new_entry)

db.session.commit()

The code speaks for itself very well: crawl the most trendy songs from Redis and store them into Database one-by-one.

Impressive code for a beginner. Good job, Peter.

That being said, sharp-eyed readers should have probably noticed something very wrong here. Can you suggest from the code above?

(Hint: about database consistency)

Time’s up. Let’s look at the loop overs song_ids:

for i, song_id in enumerate(song_ids):

new_entry = ListedEntry(song_id=song_id,

playlist_id=new_playlist.id,

ordering=i,

is_top=1)

db.session.add(new_entry)

db.session.commit()

If the operation crashes in the middle of the loop, say, the 5th song, the script will throw an exception and exit.

Boom! Your supposedly 20-song playlist now gets only 4 songs.

Worse, it might even throw an exception in the very first iteration and leave you with a playlist without any songs. That’s bad. Really bad.

So, how should we deal with this problem? Here Peter resubmit a patch:

def gen_autotrend_playlist(playlistdate):

r = get_redis()

song_ids = get_trending_song_id(r, playlistdate)

if not song_ids:

return

new_playlist = Playlist(name='Top Charts',

ordering=1,

available=0,

admin_available=1,

slug='topchart-week-' + playlistdate)

db.session.add(new_playlist)

db.session.flush()

for i, song_id in enumerate(song_ids):

new_entry = ListedEntry(song_id=song_id,

playlist_id=new_playlist.id,

ordering=i,

is_top=1)

db.session.add(new_entry)

db.session.commit() # Only commit when everything is done correctly

Here is a major question by beginners: we want a playlist_id to associate the record with the new playlist. So we considered calling db.commit() before the insertion loop which might lead to inconsistency.

That’s what session.flush() might help:

session.flush()communicates a series of operations to the database. The database maintains them as pending operations in a transaction.The changes aren’t persisted permanently to disk, or visible to other transactions until the database receives a commit for the current transaction.

The flush operation makes the new_playlist in a pending state, so we can query for the assigned id within this session.

The insertion loop does its job as usual, and we finally commit it at the end of the whole operation.

We can even do better: (by using relationship() provided by SQLAlchemy)

class ListedEntry(db.Model):

song_id = db.Column(db.Integer,

db.ForeignKey('song.id'),

primary_key=True)

playlist_id = db.Column(db.Integer,

db.ForeignKey('playlist.id'),

primary_key=True)

playlist = db.relationship('Playlist', backref='listed_entries') #Declare a relationship

def gen_autotrend_playlist(playlistdate):

r = get_redis()

song_ids = get_trending_song_id(r, playlistdate)

if not song_ids:

return

new_playlist = Playlist(name='Top Charts',

ordering=1,

available=0,

admin_available=1,

slug='topchart-week-' + playlistdate)

db.session.add(new_playlist)

for i, song_id in enumerate(song_ids):

new_entry = ListedEntry(song_id=song_id,

playlist=new_playlist,

ordering=i,

is_top=1)

new_playlist.listed_entries.append(new_entry)

db.session.commit()

We can further save an flush operation while maintaining the database consistency.

Lesson 1

Consider what shall be in a transaction!

“Hear that, Peter?”

Next, we want to add two columns to DB for query and a planned feature

distinct_chords: the unique chords appeared in a song (JSONText)chord_num: the number ofdistinct_chords(int)

We need a migration script to generate these two fields. Once again, our Fearless Peter has taken up the challenge:

def batch_derive_song_chord:

for song in db.session.query(Song).all():

chords = derive_chord(song.score)

song.chords = chords

song.chord_num = len(chords)

db.session.commit()

“Look, ma! One commit and for all!” Yea Peter… You did learn something. It runs flawlessly on you development environment, but the whoever runs it on production would probably get angry at you.

Could you find out the reason for Peter?

3… 2…1.

Here is the solution:

# Solution

def batch_derive_song_chord:

for song in db.session.query(Song).all(): # Hold all 100k ORM objects in memory?

chords = derive_chord(song.score)

song.chords = chords

song.chord_num = len(chords)

db.session.commit() # Committing 100k rows at once?

- An

query.all()attempts to fetch all records from the Database, does the ORM work and return them as a list. It would be a disaster if your table is huge. - It commits after all songs processed. That means: one fails, all fails. Let’s imagine the 99k-th song of 100k songs has a corrupted score and derive_chord throws an exception… Congratulation! You are all new now and have to re-process 99k songs.

For this kind of script, we have to make it more error-tolerant and re-runnable. It is obvious that the processing on a single song is a unit of work that can be committed atomically. A naively modified version would be like this:

def batch_derive_song_chord:

for song in db.session.query(Song).filter(Song.chords == None):

chords = derive_chord(song.score)

song.chords = chords

song.chord_num = len(chords)

db.session.commit()

If the script fails and terminates in midway, we can still guarantee the work we’ve done is not wasted.

Lesson 2

1. Prefer iterator over list in python, and avoid

query.all()unless you have a reason (if you need to, write a comment about it!)2. Break gigantic commit into multiple smaller atomic commits. Try not to do long-running operation between `flush` and `commit` because some rows may be locked in the mean time.

3. Make script re-runnable and traceable even it breaks midway.

One more thing…

Let’s re-visit the modified version of migration script:

def batch_derive_song_chord:

for song in db.session.query(Song).filter(Song.chords == None):

chords = derive_chord(song.score)

song.chords = chords

song.chord_num = len(chords)

db.session.commit()

There is an opaque performance issue here. Could you spot that out?

Answer: The session used for this query is being committed at every iteration. Since objects in a session are expunged every time committed, queried object will be re-fetched at the next iteration. It results in all songs but the first is fetched twice, which is a huge waste. (Peter: Ha! I am not that bad after all, right?)

We dealt with this problem by using separate sessions for read and write such that our commits of writes do not mess with the session for the query. An example snippet follows:

def batch_derive_song_chord: # steal the session_factory for illustration purpose session_factory = db.session.session_factory read_session = session_factory() write_session = session_factory() for (song_id, ) in read_session.query(Song.id).filter(Song.chords == None): song = write_session.query(Song).get(song_id) chords = derive_chord(song.score) song.chords = chords song.chord_num = len(chords) write_session.commit()

Session management complicated the script a bit, but overall it is still a very readable piece of code (what about RAW SQL anyone?)

To sum it up

- SQLAlchemy provides a very good abstraction and aids in delivering highly readable code.

- Think more on when you should commit. Using SQLAlchemy in a long-running script is very different from web application where in most cases session is only committed once per request. Expect your script to fail, define the unit of work for your purpose and commit your changes atomically.

- Extra care should be taken towards session life cycle to optimize performance.

Overall we are satisfied with using SQLAlchemy in our long running scripts despite a few pitfalls of it. With SQLAlchemy, scripts are easier to write than raw-SQL counterpart, and more importantly, easier to read.

It is trivial for scripts with sophisticated routine logic. Like it or not, not all batch scripts are simple enough to write in 100 LOC, and they have to be maintained.

Life is too short for bad code.

“20 percent of the code has 80 percent of the errors. Find them, fix them!” – Lowell Arthur

We care about code quality and maintain high code quality standards through code review and regularly update with new techniques and best practices.

Subscribe and bookmark our blog if you find this post helpful.

Learn more about us here and follow @Oursky on twitter.

If you find this post interesting, subscribe to our newsletter to get notified about our future posts!

7 comments

Hi! I could have sworn I’ve been to this website before but after reading through some of the post I realized it’s new to me.

Anyways, I’m definitely delighted I found it and I’ll be bookmarking and checking back frequently!

Thanks for your love 🙂 glad you enjoyed it.

Excellent post. I was checking continuously this blog and

I’m impressed! Extremely helpful info specially the last part 🙂 I care for such

information a lot. I was seeking this particular information for a long

time. Thank you and good luck.

Here is my blog post – Castaic T Shirts

I love reading through an article that will

make people think. Also, thank you for allowing me to

comment!

Hello,

It seems like you have a mistake in the last snippet of code: `song = write_session.get(song_id)` won’t work since Session object has no attribute ‘get’. Perhaps you wanted to write `song = write_session.query(Song).get(song_id)`?

Ya! You are right ! Thanks for you comment. The post has been updated~ ^^

nice write up.

I work for Nielsen and do a lot of backend batch oriented processing. I’ve seen my share of shell scripts that mash pages and pages of SQL into a string and then pass it to the command line SQL processor… and I’m constantly thinking there must be a better way.

one suggestion, I would not to commit every iteration of the loop. Instead implement some logic that commits after every 100 or 500 songs — thinking something like:

ctr += 1

if ctr % 100 == 0:

write_session.commit()