In this article, we’ll look into how addresses and names can be parsed from physical documents, like a letter or bank statement. We’ll assume that the text has already been extracted from the document images through optical character recognition (OCR) services. Our focus here is how to obtain the address and name from these unstructured strings in scanned forms and documents.

Addresses and names are in fact closely related. Often, parts of a name appear in a street address. Here’s a wiki list of roads named after people to illustrate the point.

Regular expressions (regex) naturally come to mind when searching in strings. So, let’s start with some regexes that match addresses and names! Below are some code snippets that parse text in Python as examples.

Disclaimer: Only names and addresses written in the English alphabet are considered here.

Name Regex

We’re discussing the name regex first intentionally, because an address often includes a human name. It’ll be clearer if we talk about names first.

The regex here can be applied to a first or last name text field. We’ll focus on a single data field for a human name and ignore the details that differentiate first names from surnames.

The patterns in more common names like “James,” “William,” “Elizabeth,” and “Mary” are trivial. They can be matched easily with a simple letters-only pattern. How about names with more variation? There are plenty of languages with different naming conventions. We’ll try to sort the different types of names into basic groups:

- Hyphenated names, e.g., Lloyd-Atkinson, Smith-Jones

- Names with apostrophes, e.g., D’Angelo, D’Esposito

- Names with spaces in-between, e.g., Van der Humpton, De Jong, Di Lorenzo

Carry on reading to see how text extraction can be done with a regex.

Regex

This regex should do the trick for most names:

r'^+$'

Code Snippet

You can sub in other values in the test list to see if they can be matched against this regex:
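Here is a minimal sketch of such a test. The pattern `NAME_RE` is our own illustrative assumption (letters separated by single hyphens, apostrophes, or spaces), not necessarily the regex quoted above, so treat it as a starting point rather than a definitive name validator.

```python
import re

# Assumed, illustrative pattern: one or more alphabetic parts joined by a
# single space, hyphen, or apostrophe (straight or curly).
NAME_RE = re.compile(r"^[A-Za-z]+(?:[ '’-][A-Za-z]+)*$")

# Swap in your own values to see whether they match.
test_names = ["James", "Lloyd-Atkinson", "D'Angelo", "Van der Humpton", "R2-D2"]

results = {name: bool(NAME_RE.match(name)) for name in test_names}
for name, matched in results.items():
    print(f"{name!r}: {matched}")
```

The three groups listed earlier (hyphenated names, names with apostrophes, names with spaces) all pass, while a value containing digits such as “R2-D2” is rejected.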

Address Regex

For geographical or language reasons, the format of an address varies all over the world. Here’s a long list describing these formats per country.

Because address formats vary so much, no single regex can realistically cover all these patterns. Even if one managed to do so, it’d be very challenging to test, as the test data set would have to be enormous.

Our address regex only covers some of the common formats in English-speaking countries. It should do the trick for addresses that start with a number, like “123 Sesame Street.” It comes from this discussion thread, where it received positive feedback.

Regex

^(\d+) ?((?= ))? (.*?) ([^ ]+?) ?((?<= )APT)? ?((?<= )\d*)?$

Code Snippet
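As a rough sketch, the pattern can be tried out like this. The code uses the regex exactly as printed above; the helper `parse_address` and the way we label the captured groups (house number, street name, street type) are our own assumptions for illustration.

```python
import re

# The pattern exactly as printed above.
ADDRESS_RE = re.compile(
    r"^(\d+) ?((?= ))? (.*?) ([^ ]+?) ?((?<= )APT)? ?((?<= )\d*)?$"
)

def parse_address(line):
    """Return (house number, street name, street type), or None if no match."""
    match = ADDRESS_RE.match(line.strip())
    if match is None:
        return None
    # Groups 1, 3 and 4 hold the parts we care about in this sketch.
    return match.group(1), match.group(3), match.group(4)

print(parse_address("123 Sesame Street"))
print(parse_address("Sesame Street"))  # no leading number, so no match
```

Note that an address without a leading number is not matched at all, which is one of the gaps discussed in the next section.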

Limitations of Using Regex to Extract Names and Addresses

Dealing with Uncommon Values

While these regexes may be able to validate a large portion of names and addresses, they will likely miss some, especially those that are non-English or newly introduced. For example, Spanish and German names weren’t considered thoroughly here, probably because the developer wasn’t familiar with these languages.

No Pattern to Follow

Regex works well on data that follows a strict pattern, and neither names nor addresses belong to that category. They’re ever-changing, with new instances created every day and massive variation among them, so regex isn’t going to do a good job of extracting them. In short, they are not “regular” enough: there’s no intuitive pattern to follow.

Unable to Find the Likeliest Name

Regex also can’t tell which of several matches is the “most likely” name. Let’s take a step back and assume there’s a regex R that can extract names flawlessly from documents that were scanned via an OCR data extraction service. We want to get the recipient’s name (from a letter from Ann to Mary):

Dear Mary,

How have you been these days? Lately, Tom and I have been planning to travel around the World.

...

...

...

Love,

Ann

There are three names in the letter: Mary, Tom, and Ann. If you use R to find names, you’ll end up with a list of all three, not just Mary, the recipient.

So, how can this be achieved? We can give each name a score based on:

- Its position on the document

- How “naive” it is (i.e., how often it appeared in a training data set)

- How likely the name is to be the single target, based on a training data set
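The three signals above could be combined into one score along these lines. This is only a sketch: the `Candidate` fields, the weights, and every number in the example are hypothetical, and it assumes the recipient tends to appear near the top of a letter.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    line_index: int     # 0-based line where the name was found
    frequency: float    # hypothetical: how often the name appears in training data (0..1)
    target_rate: float  # hypothetical: how often such a name was the single target (0..1)

def score(candidate, total_lines, weights=(0.5, 0.2, 0.3)):
    """Weighted sum of the three signals; the weights are illustrative only."""
    w_pos, w_freq, w_target = weights
    # Assumption: names closer to the top of the document score higher.
    position = 1.0 - candidate.line_index / max(total_lines - 1, 1)
    return (w_pos * position
            + w_freq * candidate.frequency
            + w_target * candidate.target_rate)

# Made-up values for the three names in the letter from Ann to Mary.
candidates = [
    Candidate("Mary", line_index=0, frequency=0.9, target_rate=0.8),
    Candidate("Tom", line_index=1, frequency=0.8, target_rate=0.1),
    Candidate("Ann", line_index=6, frequency=0.7, target_rate=0.2),
]
best = max(candidates, key=lambda c: score(c, total_lines=7))
print(best.name)
```

With these made-up inputs, “Mary” wins because she appears first and has the highest target rate. A regex alone has no way to express this kind of ranking.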

Unable to Differentiate Name and Address

On paper, names and addresses can look the same. “John” can be a name, or it can be part of an address, like “John Street”. Regexes can’t see this difference and react accordingly. We surely don’t want results that treat “Sesame Street” as a name and “Mr. Sherlock Holmes” as a street address!

A Quick Sum-Up

Do bear in mind that using regex for name validation isn’t really a good idea, as new names are created at a rate that a regex won’t be able to keep up with. For the same reason, regexes won’t be able to extract addresses from text accurately either.

No need to worry though! Carry on reading; we’ve got you covered!

Classify Named Entities with BERT

What we have here is a named entity recognition (NER) problem, where we have to locate and then extract names and addresses from a bunch of unstructured strings (from documents processed with OCR).

We assume you’re familiar with some core machine learning and neural network concepts. We’ll focus on our flow for recognizing the target named entities {name}, {address}, and {telephone number}. Yep, apart from names and addresses, telephone numbers are also a target. We didn’t provide a regex for them because their format varies in length and geographical code.

If you find the definitions or technical details confusing, we provide inline links to help you get up to speed on the background knowledge.

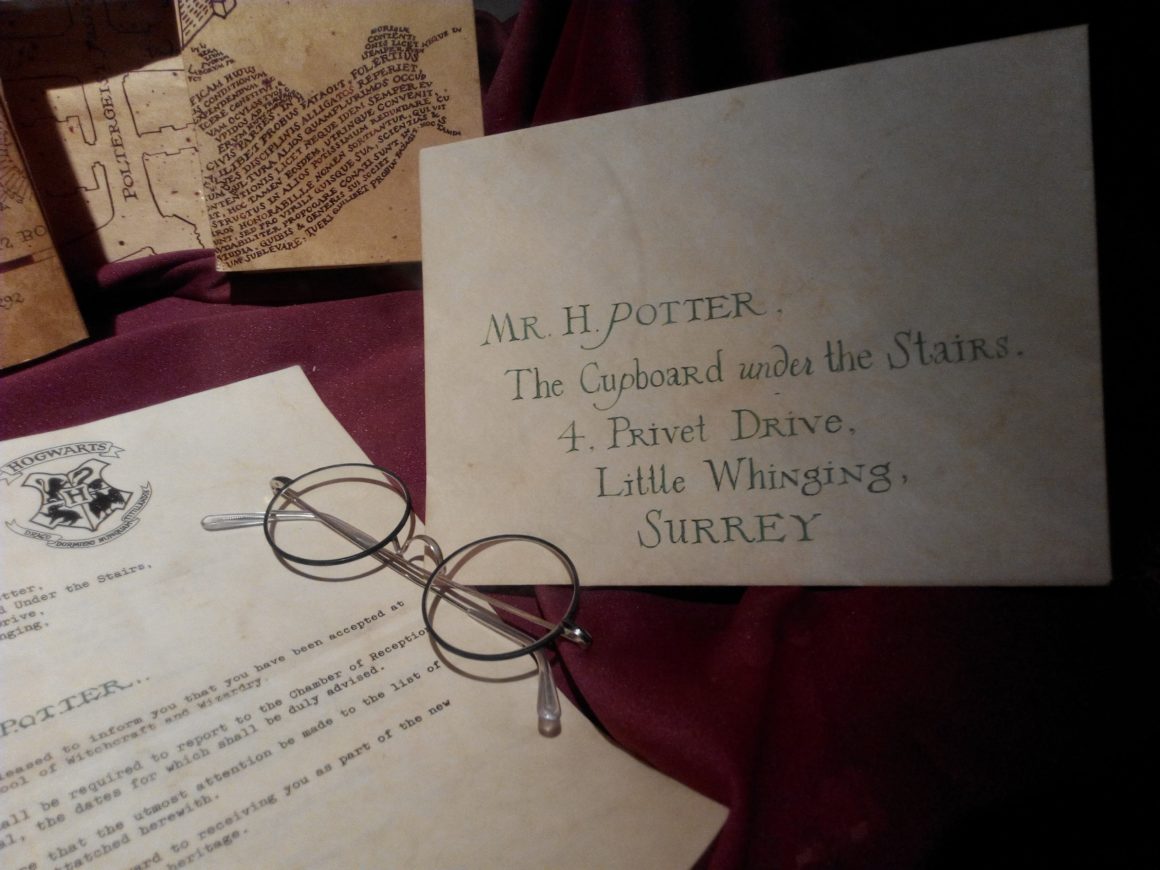

Example: Acceptance Letter for Harry Potter

We’ll use an acceptance letter from Hogwarts to Harry Potter as an example throughout this part. Our goal is to get all the names and the recipient’s address from this letter.

Mr. H Potter The Cupboard under the Stairs, 4 Privet Drive, Little Whinging, Surrey Dear Mr Potter, We are pleased to inform you that you have been accepted at Hogwarts School of Witchcraft and Wizardry. Please find enclosed a list of all necessary books and equipment. Term begins on 1 September. We await your owl by no later than 31 July. Yours sincerely, Minerva McGonagall Deputy Headmistress

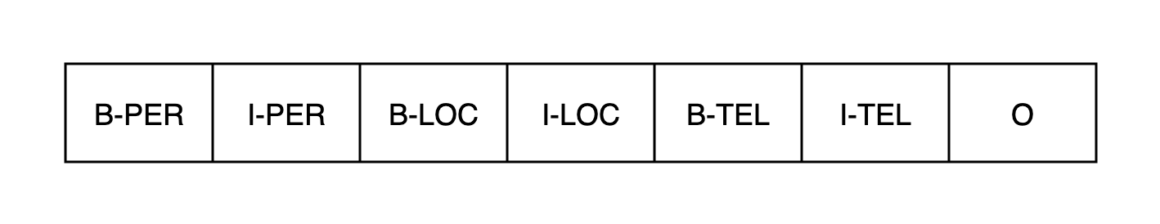

Output: Tagged Tokens with IOB

Our output will be a list of tagged tokens using the inside-outside-beginning (IOB) format. Our targets are {name}, {address}, and {telephone number}, so here we have seven tags — beginning (B) and inside (I) for each target, and outside (O), meaning a token isn’t what we’re looking for. LOC, which stands for location, is just the address in this case.

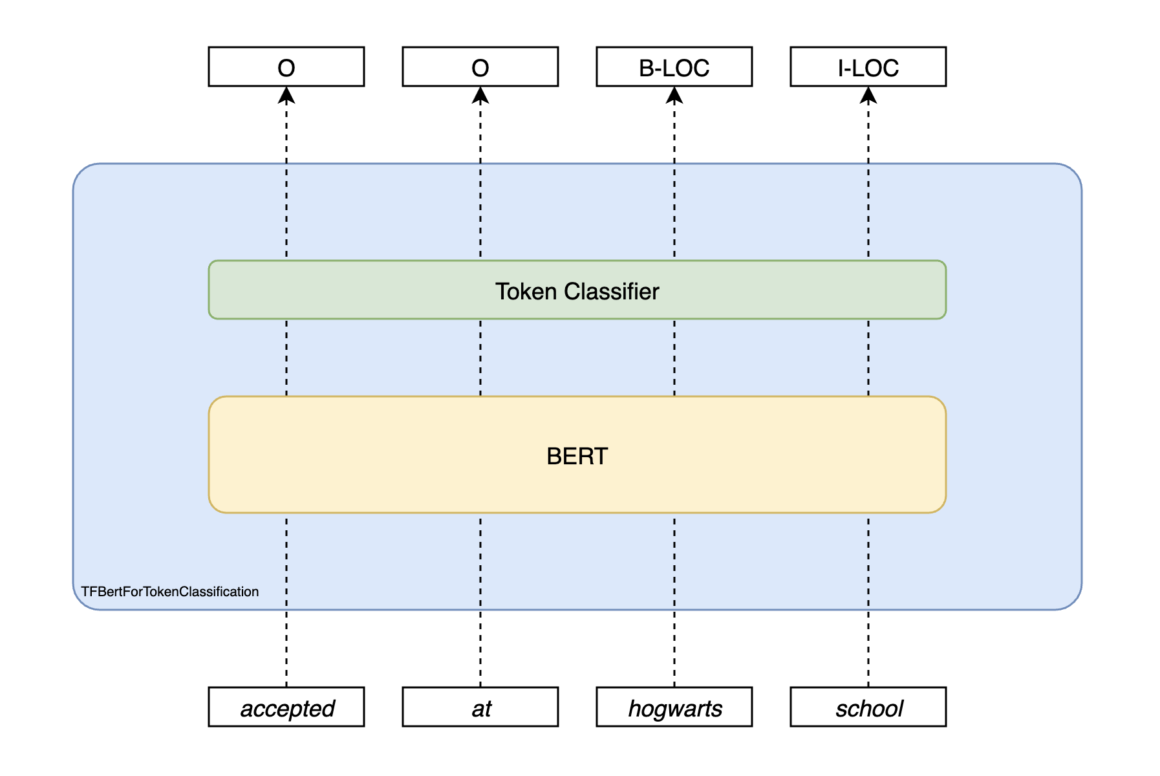
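To make the IOB format concrete, here is a small sketch that merges tagged tokens back into whole entities. The helper `group_entities` and the sample tokens are our own illustration; only the seven tag names come from the description above.

```python
TAGS = ["B-PER", "I-PER", "B-LOC", "I-LOC", "B-TEL", "I-TEL", "O"]

def group_entities(tokens, tags):
    """Merge IOB-tagged tokens into (entity_type, text) spans."""
    entities, current_type, current_tokens = [], None, []
    for token, tag in zip(tokens, tags):
        prefix, _, entity_type = tag.partition("-")
        if prefix == "I" and entity_type == current_type:
            # Continuation of the span that is currently open.
            current_tokens.append(token)
            continue
        # A "B-" tag, an "O", or a stray "I-" closes any open span.
        if current_type is not None:
            entities.append((current_type, " ".join(current_tokens)))
        if prefix == "O":
            current_type, current_tokens = None, []
        else:
            # A stray "I-" is treated as the start of a new span.
            current_type, current_tokens = entity_type, [token]
    if current_type is not None:
        entities.append((current_type, " ".join(current_tokens)))
    return entities

tokens = ["Dear", "Mr", "Potter", ",", "4", "Privet", "Drive"]
tags = ["O", "B-PER", "I-PER", "O", "B-LOC", "I-LOC", "I-LOC"]
print(group_entities(tokens, tags))
```

Each “B-” tag opens a new entity, the following “I-” tags of the same type extend it, and “O” tokens are dropped.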

Token Classifier on Top of BERT

Bidirectional Encoder Representations from Transformers (BERT) is quite popular these days due to its state-of-the-art performance in handling Natural Language Processing (NLP) tasks. BERT is a transformer-based encoder that acts as a feature extractor, and its output embeddings can be passed to a downstream classifier for further processing. If you’re not familiar with what BERT is and how it works, check out this amazing article.

The recommended approach to an NER task is to add a token classifier on top of BERT (which is already pre-trained when you download it). We architected our solution accordingly.

Our code was implemented with classes provided by Huggingface. Text sequences are first fed to a PreTrainedTokenizer before the tokens are passed through TFBertForTokenClassification. Our transformers were developed with TensorFlow.

TFBertForTokenClassification includes an underlying pre-trained BERT model, and a top token classifier processing the embeddings incoming from below. As suggested in Huggingface’s documentation, TFBertForTokenClassification is created for Named-Entity-Recognition (NER) tasks.

The output for each input token, say “Hogwarts,” is a vector of logits, one for each of our seven tags {B-PER, I-PER, B-LOC, I-LOC, B-TEL, I-TEL, O}. Each logit represents how likely the token is to belong to that tag. Post-processing functions are applied to the logits before the final tag for a token is obtained via an argmax function.

So the sequence, [“accepted”, “at”, “hogwarts”, “school”] will result in a corresponding tag list [“O”, “O”, “B-LOC”, “I-LOC”]. We should end up with the address words, “The Cupboard under the Stairs…”, all tagged as “I-LOC”, except the first being a “B-LOC”.

Name tokens like [“Mr”, “Potter”] should end up with the tags [“B-PER”, “I-PER”].
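The step from logits to a tag can be sketched in a few lines of plain Python. The logit values below are made up for illustration; in practice they would come from the token classifier, and the post-processing described later would run before the argmax.

```python
import math

TAGS = ["B-PER", "I-PER", "B-LOC", "I-LOC", "B-TEL", "I-TEL", "O"]

def softmax(logits):
    """Convert raw logits to probabilities (shifted by the max for stability)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def pick_tag(logits):
    """Argmax: return the tag whose logit is the highest."""
    best_index = max(range(len(logits)), key=lambda i: logits[i])
    return TAGS[best_index]

# Made-up logits for the token "hogwarts", one value per tag in TAGS.
hogwarts_logits = [0.3, -1.2, 4.1, 1.0, -2.5, -3.0, 0.8]
probabilities = softmax(hogwarts_logits)
print(pick_tag(hogwarts_logits))
```

Softmax isn’t strictly needed to pick the winner, since it preserves the ordering of the logits, but probabilities are easier to reason about when scores get adjusted afterwards.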

Most of our effort in developing this solution went into two parts: first, fine-tuning with synthetic data sets; second, differentiating tags with post-processing functions. For configuration and training with Huggingface, we mostly followed their guide.

Pseudo Code

for text_chunks in input:
    regroup words in text_chunks so that they don’t get separated during the tokenization stage
    for word in regrouped_text_chunks:
        tokenize the word
        classify each token to obtain its logits

Fine-Tuning with Synthetic Data Sets

The pre-trained model underlying TFBertForTokenClassification was trained on more general data. To boost its accuracy in parsing addresses, names, and telephone numbers from our documents, we fine-tuned it with our own data sets.

Since we’re looking to get the targets from documents such as bank statements, bills, and invoices, we collected a large number of them and fed them to the model for fine-tuning. If you’re looking to build a BERT model for an NER task on a specific text format, prepare your fine-tuning data sets accordingly!

Differentiate Tags with Post-Processing Functions

As we covered earlier, addresses and human names are so similar that, at times, it’s hard to tell which is which. The issue we faced was that often, a token has a high likelihood of being both a location (i.e., address) and a person’s name.

To separate them in these cases, we implemented several measures.

Look by Keywords

An address usually contains location or street keywords like “road”, “block”, “shop”, or “level”. That’s a strong indicator that a token is part of an address.

A name often comes with a title like “Mr” or “Ms”. So when we find them near the token, it’s likely that the token is a name. Let’s take the signature in the acceptance letter as an example:

Minerva McGonagall Deputy Headmistress

“Minerva McGonagall” has to be a name, as indicated by her position, “Deputy Headmistress,” at Hogwarts. Without her position, it’d be hard to tell. “Minerva McGonagall Street” sounds quite natural to me!

These keywords can be used to mark down a token as well. Let’s say the current token has the highest probability of being a name, but there’s a word “street” right next to it. In this case, the score of this token being a name gets marked down.
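A keyword check of this kind might look like the sketch below. The keyword sets reuse the examples mentioned above; the function name, the score dictionary, and the `boost` value are hypothetical and only meant to show the idea of marking scores up or down.

```python
LOCATION_KEYWORDS = {"road", "street", "block", "shop", "level"}
TITLE_KEYWORDS = {"mr", "ms"}

def adjust_by_keywords(scores, neighbours, boost=1.0):
    """Return a copy of `scores` nudged by keywords found next to the token.

    `scores` maps "PER" and "LOC" to a score; `boost` is an illustrative constant.
    """
    adjusted = dict(scores)
    words = {w.lower().strip(".,") for w in neighbours}
    if words & LOCATION_KEYWORDS:
        adjusted["LOC"] += boost
        adjusted["PER"] -= boost  # mark the name score down
    if words & TITLE_KEYWORDS:
        adjusted["PER"] += boost
    return adjusted

# "John" initially looks more like a name, but "Street" sits right next to it.
before = {"PER": 2.0, "LOC": 1.5}
after = adjust_by_keywords(before, neighbours=["12", "Street"])
print(max(after, key=after.get))
```

In this example the neighbouring “Street” flips the token from a name to a location, while a neighbouring title such as “Mr.” would push it the other way.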

Word Length

We also adjust the logits based on the word’s length. If a word is 12 characters long, it’s quite likely to be part of an address rather than a human name. Long names aren’t really that rare, though. After all, we’re only looking at likelihood here.
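The length rule can be sketched in the same style. The threshold of 12 follows the example above; the `shift` value and the function itself are assumptions, and the shift is deliberately small because long names do exist.

```python
def adjust_by_length(scores, word, threshold=12, shift=0.5):
    """Move a little score from "PER" to "LOC" for long words.

    `shift` is an illustrative constant, not a tuned value.
    """
    adjusted = dict(scores)
    if len(word) >= threshold:
        adjusted["LOC"] += shift
        adjusted["PER"] -= shift
    return adjusted

print(adjust_by_length({"PER": 1.0, "LOC": 1.0}, "Northumberland"))
print(adjust_by_length({"PER": 1.0, "LOC": 1.0}, "Potter"))
```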

Model Trained for You, Out of the Box

Even if you are familiar with machine learning concepts, an enormous amount of effort is required to collect enough good-quality data for fine-tuning and training. You’d also need to learn the patterns (not obvious at times) between input tokens in order to write correct and effective post-processing logic.

Some good news: the classifier we have just covered is available to everyone, since it’s a feature of our very own data extraction service, FormX. Name and address parsers can be applied to:

- document-level under Auto Extraction Item,

- only a selected region on documents, under Detection Region

Sign up for a free account and try it out, along with a vast range of other functions!

Address and Name Regexes Are Not Good Enough for Data Extraction

So, we’ve covered regular expressions here as a quick way to get names and addresses. You’d think they’d allow you to parse all possible strings, but, Houston, we have a problem. Regexes are inherently unable to deal with edge cases, rank candidates by likelihood, or distinguish between names and addresses.

Consequently, we adopted a BERT-based AI model to overcome regex’s limitations. You’ll need to leverage machine learning and NLP capabilities, as well as prepare a good amount of data, if you choose that path. The research and development (R&D) stage takes a serious amount of time, so think it through before you make the decision. Always weigh the trade-offs between these options: their complexity, accuracy, and maintainability.

That’s the end of this article. Do you have other solutions to this problem in mind? Feel free to let us know your thoughts by commenting below! Cheers and see you next time. 🙂